2014 Canadian Society of Transplantation Annual Scientific Conference (CST ASM 2014)

Le Centre Sheraton Hotel, Montréal, Québec, February 27 - March 1, 2014

Methods : The present research is qualitative and follows a phenomenological approach. Ten members of donor-recipient dyads were interviewed individually. Interviews were audio recorded and transcribed verbatim. The dyads included in the study were diverse in the type of donor-recipient relationship and in the time since donation. Data were analyzed following the principles of Interpretative Phenomenological Analysis developed by Smith (2009).

Results : Results highlight the importance of the particular interpersonal and social context within which the donation took place in shaping the discourse of each donor-recipient dyad. In related dyads, the gesture seemed to be interpreted as a continuation of the role donors adhere to in a larger social context. For example, themes of reciprocity/equality/rivalry were especially common within sibling relationships. In determined relationships, donating seemed natural and automatic and receiving was easily integrated. In contrast, when the donor and recipient were not related, meaning making was more difficult to achieve and there was no easily accessible point of reference for understanding receiving.

Conclusions : Results provide in-depth information that can in turn be shared with future candidates for donation and transplantation to help them prepare for the experience and inform their decision process. Results also remind us of the importance of considering not only the physical and psychological experience of donors and recipients, but also the larger interpersonal and social context within which the donation takes place, in order to derive a more accurate model of health after transplantation.

2 Department of Urologic Sciences, University of British Columbia, Vancouver, BC, Canada

3 Centre for Blood Research, Department of Pathology and Laboratory Medicine, University of British Columbia, Vancouver, BC, Canada

4 Centre for Blood Research, Department of Pathology and Laboratory Medicine, University of British Columbia, Vancouver, BC, Canada; Department of Chemistry, University of British Columbia, Vancouver, BC, Canada

Methods: Human endothelial cell cultures were used as an in vitro model. Heart transplantation in mice was used as an in vivo model. Cell death was indicated by lactate dehydrogenase (LDH) release.

Results: Preservation of mouse hearts in HPG solution at 4°C reduced tissue damage compared with preservation in UW solution. In isotransplantation, transplanted hearts pre-preserved in HPG solution had a better functional recovery than those in UW solution, which was associated with lower degrees of tissue injury and neutrophil infiltration. In allotransplantation, HPG solution-preserved donor hearts survived longer than those preserved in UW solution: nine of ten transplants in the UW solution group failed within 24 h, while only four of nine transplants in the HPG group were rejected, and three of them survived with function for 20 days in cyclosporine-treated recipients (P = 0.0175). In cultured cells, more cells survived preservation with cold HPG solution than with UW solution, which correlated with the maintenance of cell membrane fluidity and intracellular adenosine triphosphate.

Conclusion: Preservation with HPG solution significantly enhances the prevention of cold ischemic injury in donor organs, suggesting that HPG solution is a promising alternative to UW solution for hypothermic storage of donor organs for transplantation.

2 Centre de recherche du CHUM; Nephrology and Transplantation Division of the CHUM

3 Nephrology and Transplantation Division of the CHUM

Living kidney transplantation (LKT) offers the best medical outcomes for organ recipients. Historically, our centre had a low rate of LKT (between 10% and 20% of all renal transplantations performed). In 2009, in an effort to increase living organ donation (LOD), a dedicated team was created. Its mandate was to promote LOD at our centre and at referring centres, to coordinate assessments of living organ donors, to facilitate the process, and to ensure long-term follow-up after the donation. The aim of this study was to document the impact of this team by comparing LOD rates at our hospital from 2005 to 2008 and from 2009 to 2012.

Methods

Using our electronic database, we conducted a retrospective analysis of all living organ donors who contacted our centre from 01-01-2005 to 31-12-2008 and from 01-01-2009 to 31-12-2012. Follow-up was conducted until 01-10-2013.

Results

During the 2005–2008 period, 191 individuals interested in donating a kidney to 150 recipients contacted our centre (an average of 1.27 donors per recipient). A total of 50 renal transplantations were performed using organs from these living donors (26.2%). During the 2009–2012 period, 305 individuals (including 13 altruistic donors) interested in donating a kidney to 202 recipients contacted our centre (an average of 1.5 donors per recipient). A total of 72 (24%) renal transplantations were performed and one is planned in the next month using organs from these living donors, including 8 LKTs through the LDPE. Roughly 12.5% of the potential donors are still waiting for an assessment or are in the process of being evaluated.

Conclusion

The implementation of a dedicated LOD team increased by 59.2% the number of potential donors who contacted our centre, resulting in 46% more LKTs. These data support the creation of dedicated LOD teams to increase LKT.
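The headline figures can be rechecked from the counts reported in the Results. The sketch below is illustrative only: it assumes the 46% increase counts the one planned transplant alongside the 72 performed, and all variable names are hypothetical. Recomputed this way, the increase in potential donors comes out nearer 59.7% than the reported 59.2%; the abstract does not say how its figure was derived, so the gap may reflect rounding or a different denominator.

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return 100.0 * (after - before) / before


# Counts taken from the Results section.
donors_early, recipients_early, lkt_early = 191, 150, 50
donors_late, recipients_late = 305, 202
lkt_late_performed = 72
# Assumption: the planned transplant is included in the "46% more LKTs" figure.
lkt_late_with_planned = lkt_late_performed + 1

donors_per_recipient_early = donors_early / recipients_early
donors_per_recipient_late = donors_late / recipients_late
conversion_early = 100.0 * lkt_early / donors_early
conversion_late = 100.0 * lkt_late_performed / donors_late
donor_increase = percent_change(donors_early, donors_late)
lkt_increase = percent_change(lkt_early, lkt_late_with_planned)

print(f"Donors per recipient: {donors_per_recipient_early:.2f} vs {donors_per_recipient_late:.2f}")
print(f"Donors proceeding to LKT: {conversion_early:.1f}% vs {conversion_late:.1f}%")
print(f"Increase in potential donors: {donor_increase:.1f}%")
print(f"Increase in LKTs: {lkt_increase:.1f}%")
```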

Extracorporeal Membrane Oxygenation to Lung Transplantation at the University of Alberta

Methods: We conducted a retrospective study of our experience with adult and pediatric ECMO bridge to LTx from 2002 to 2012.

Results: A total of 350 patients received a lung or heart-lung transplant in our institution between 2002 and 2012. One pediatric and seven adult patients were bridged to LTx with ECMO; age 4–63 years (median 24.5), three males. Primary conditions at referral were cystic fibrosis (3), primary pulmonary hypertension (1 adult, 1 pediatric), idiopathic pulmonary fibrosis (1), Eisenmenger's (1) and Wegener's granulomatosis (1). Conditions leading to ECMO were cardiac arrest (1, pediatric), hemoptysis/pulmonary hemorrhage (3), respiratory failure (4). One patient had 4 days of ECMO before a second double LTx for graft failure. Days on ECMO pretransplantation were 1-40 days, median 3.5. Types of ECMO used were venoarterial (7 VA) and venovenous (1 VV). All patients were mechanically ventilated at the time of ECMO. A 4-year-old pediatric patient was on VA ECMO for 40 days (35 days on a semiambulatory VA ECMO). Transplant types included five double lung, one single lung, and two heart-lung transplants. Donor height mismatch was -27 to +15 cm. Hyperoxia test pO2 values on donors were 333-497 mmHg, median 413. Ischemic time was 166-711 minutes, median 354. Total cardiopulmonary bypass (CPB) time during transplant was 240-494 minutes, median 263. The pediatric patient was back on CPB for 24 minutes post implantation and the chest was left open for five days. One adult patient required reexploration and the chest was closed on day 3. Two adult patients required tracheostomy. One adult patient died on day 1 following a single lung transplant for Eisenmenger's syndrome. Seven patients survived to hospital discharge after 23-100 days, with time on ventilator of 22-1200 hours (median 216) and ICU stay of 6-18 days. One patient (pediatric) had a cardiac arrest and recovered completely, and one had renal failure requiring dialysis. Six patients (75%, adult and pediatric) were alive at one year post LTx.

Conclusions: ECMO to LTx can be successful. In our experience, patients bridged from ECMO to single LTx and urgent re-LTx have poor outcomes.

2 Institute of Cardiovascular Sciences, St. Boniface Research Center, University of Manitoba, Winnipeg, Canada

3 Anesthesia and Perioperative Medicine, St. Boniface Hospital, University of Manitoba, Winnipeg, Canada

4 National Research Council Institute for Biodiagnostics, Winnipeg, Manitoba

5 Cardiac Surgery, Mazankowski Alberta Heart Institute, University of Alberta, Edmonton Canada

Ex vivo heart perfusion has been proposed as a means to resuscitate non-utilized donor hearts and expand the pool of organs available for transplant. However, a reliable means of demonstrating myocardial functional recovery and organ viability prior to transplantation is required. Therefore, we sought to identify metabolic and functional parameters that were predictive of myocardial performance during ex vivo heart perfusion.

Methods:

Six normal pig hearts (220±13 grams) and 8 donation after circulatory death hearts (244±13 grams) were procured and perfused ex vivo at 37°C with a donor blood-STEEN solution (hemoglobin concentration of 45 g/L). Hearts were transitioned from Langendorff mode into a working heart mode for assessments after 1, 3, and 5 hours of ex vivo perfusion. Myocardial performance was determined by measuring the cardiac output indexed to heart weight at a left atrial pressure of 8 mmHg and an aortic diastolic pressure of 40 mmHg. Myocardial functional parameters were assessed using a conductance catheter placed in the left ventricle. Metabolic function was assessed by measuring myocardial oxygen consumption and lactate production. Linear regression with stepwise selection analysis was performed to determine which metabolic and functional parameters best correlated with myocardial performance.

Results:

The minimum rate of pressure change (dP/dtmin) was the best functional predictor of myocardial performance (R²=0.915), while the isovolumic relaxation time (Tau; R²=0.780), maximum rate of pressure change (dP/dtmax; R²=0.621), preload recruitable stroke work (PRSW; R²=0.566), end-diastolic pressure volume relationship (EDPVR; R²=0.226), and end-systolic pressure volume relationship (ESPVR; R²=0.144) correlated to a lesser degree. Myocardial oxygen consumption (R²=0.745) was the best metabolic predictor of myocardial performance, while lactate metabolism failed to demonstrate any correlation (R²=0.004). The combination of the dP/dtmin and myocardial oxygen consumption was the most reliable predictor of myocardial performance (R²=0.937).

Conclusions:

The combination of the dP/dtmin and myocardial oxygen consumption produced the most reliable assessment of myocardial performance during ex vivo heart perfusion. Further studies are required to determine thresholds of these parameters that predict successful transplantation.

2 Faculty of Medicine and Dentistry, University of Alberta

Lung transplantation remains the only treatment for advanced end-stage lung disease from a variety of etiologies. A profound lack of donor organs remains the greatest challenge in providing lung transplantation, with stagnant rates of lung transplantation at many large centers. As more patients are being referred for lung transplantation, there is a growing rate of deaths on the recipient waitlist.

CASE DESCRIPTION:

A 65-year-old female with pulmonary fibrosis who had been on the recipient waitlist for over 2 years was the prospective recipient. She had recently deteriorated and was in imminent need of mechanical ventilation. The donor lungs were procured from a 69-year-old female who had been in a massive motor vehicle accident. This event had caused multiple contusions and a parenchymal laceration leading to an air leak requiring wedge resection. Consequently, the best PO2 on challenge gas (i.e., P/F ratio) was only 267.

DISCUSSION:

These lungs were chosen given the recent deterioration of the prospective recipient. The ex-vivo normothermic lung perfusion (EVLP) run lasted over 10.5 hours, making it the longest successful clinical EVLP case of very marginal lungs completed in Canada to date. Lung function parameters were continuously monitored throughout the run. The donor lungs met acceptable P/F ratios and as such were transplanted into the recipient. After 2 months in hospital the recipient was discharged home, where she continues to function well 6 months post-transplant.

CONCLUSION

This case report adds to the growing literature on the value of EVLP, as ex-vivo perfusion was possible with successful clinical transplantation after 10.5 hours on the Lung Organ Care System (OCS). Furthermore, the successful use of severely marginal lungs is an encouraging step toward expanding our limited donor lung pool and may ultimately lead to improved rates of transplantation for our growing waitlists of patients requiring lung transplantation.

2 Department of Physical Therapy, Faculty of Medicine, University of Toronto, Toronto, Ontario, Canada; Respiratory Medicine, West Park Health Centre, Toronto, Ontario, Canada

3 Department of Physical Therapy, Faculty of Medicine, University of Toronto, Toronto, Ontario, Canada; Lung Transplant Program, Toronto General Hospital, University Health Network, Toronto, Ontario, Canada

4 Lung Transplant Program, Toronto General Hospital, University Health Network, Toronto, Ontario, Canada

5 Department of Physical Therapy, Faculty of Medicine, University of Toronto, Toronto; Sunnybrook Research Institute, Sunnybrook Health Sciences Centre, St. John’s Rehab Program, Toronto; Respiratory Medicine, West Park Health Centre, Toronto

Methods : A retrospective chart review was conducted on individuals who received a single LT, double LT, or heart-lung transplant at our centre between 2006 and 2009. The following data were extracted: pre-transplant diagnosis, age at transplant, pre-transplant exercise capacity measured by six-minute walk distance, and other data pertaining to patient demographics, clinical characteristics, and healthcare utilization. LT recipients were categorized based on discharge destination into either a “home” (HG) or “rehabilitation” group (RG) for analysis.

Results : Medical charts of 243 patients were identified: 197 (81%) were discharged home, 42 (17%) were discharged to inpatient rehabilitation, and four (2%) were discharged to an ‘other’ destination. The median age of the HG was lower (53 years, IQR=38-61) than that of the RG (57 years, IQR=50-64), p<0.05. The HG had a shorter median post-transplant intensive care unit length of stay (4 days, IQR=15-28) compared to the RG (56 days, IQR=43-88), p<0.001. The RG had a lower median baseline six-minute walk distance (245 m; IQR=172-338) compared to the HG (329 m, IQR=242-413), p=0.001. Using chi-square analysis, individuals with CF and COPD were found to be more likely to be discharged home, whereas the ILD, PAH, and ‘other’ groups were more likely to be discharged to rehabilitation (p < 0.001).

Conclusion : The present study identified pre-transplant diagnosis, age at time of transplant, pre-transplant functional exercise capacity, and post-transplant intensive care unit length of stay to be factors affecting discharge destination following LT. The identification of these factors has the potential to facilitate early discharge planning and optimize continuity of care.

2 Division of Allergy and Clinical Immunology, The Hospital for Sick Children, Department of Pediatrics, University of Toronto, Toronto, Canada.

3 Division of Cardiology, The Hospital for Sick Children, Department of Pediatrics, University of Toronto, Toronto, Canada.

4 Division of Nephrology, The Hospital for Sick Children, Department of Pediatrics, University of Toronto, Toronto, Canada.

Methods: The study cohort included all children (<18 years) who underwent liver, heart, kidney or intestinal transplantation at a pediatric tertiary medical center between 2000 and 2012, with a follow-up period of 6 months or more post transplant. Patients with a pre-transplant history of allergy or autoimmunity were excluded.

Results: A total of 273 patients (111 liver recipients, 103 heart, 52 kidney, and 7 multiple organs) with a median follow-up period of 3.6 years were included in the study. A total of 92 (34%) patients developed allergy or autoimmune disease after transplantation, with a high prevalence among liver (41%) and heart transplant recipients (40%) compared to kidney recipients (4%; P<0.001). Post-transplant allergies included eczema (n=44), food allergy (22), eosinophilic gastrointestinal disease (11) and asthma (28). Autoimmunity occurred in 20 (7.3%) patients, presenting mainly as autoimmune cytopenia (n=10). In a multivariate analysis, female gender, young age at transplantation, family history of allergy, EBV infection and elevated eosinophil count more than 6 months post transplantation were associated with an increased risk for immune dysregulation. Two patients (0.7%) died from autoimmune hemolytic anemia, and in 50 patients (18%) the allergy or autoimmunity did not improve over time.

Conclusions: Allergy and autoimmunity after solid organ transplantation are common in pediatric liver and heart recipients. Allergy and autoimmunity pose a significant health burden on transplant recipients and suggest a state of immune dysregulation post transplant.

2 Institut de Recherche en Immunologie et Cancérologie (IRIC), Université de Montréal

3 CHUL Research Center/CHUQ

4 Université de Montréal

5 Biotechnology Research Institute, Montréal

MV released by apoEC were analyzed by small particle flow cytometry (spFACS) and purified by sequential centrifugation from serum-free medium conditioned by apoEC. Electron microscopy (EM) and differential proteomic MS/MS analyses were performed on apoptotic MV. Aortas from female BALB/c mice were transplanted to fully MHC-mismatched female C57Bl/6 mice in the absence of immunosuppression. Purified donor or recipient apoptotic MV were injected intravenously post-surgery every other day for 3 weeks, and recipients were sacrificed 3 weeks post-transplantation.

Two groups of MV released downstream of caspase-3 activation by apoEC were identified by spFACS and EM: apoptotic bodies (≥800nm) and apoptotic nanovesicles (≤100nm). Proteomic analysis revealed strikingly different protein profiles in apoptotic nanovesicles vs bodies. LG3 (C-terminal fragment of perlecan) was highly enriched in apoptotic nanovesicles. To evaluate the immunogenic potential of apoptotic MV, aortic allograft recipients were injected with apoptotic nanovesicles, apoptotic bodies or vehicle. Injection of apoptotic nanovesicles generated from either the donor or the recipient strain significantly increased anti-LG3 IgG titers compared to both control groups, demonstrating a specific and alloindependent immune response. Recipients injected with apoptotic nanovesicles also showed increased neointima formation and infiltration with CD3+ cells.

Collectively these results identify apoptotic endothelial nanovesicles as a novel inducer of humoral responses leading to increased anti-LG3 production and accelerated vascular rejection.

2 Programmes de bioéthique de l'Université de Montréal

3 Centre de recherche du CHUM, Service de néphrologie du CHUM

Kidney transplant recipients in the O blood group are at a disadvantage when it comes to kidney exchange programs (KEPs), since they can only receive organs from O donors. A way to remedy this situation is through altruistic unbalanced paired kidney exchange (AUPKE), where a compatible pair consisting of an O donor and a non-O recipient is invited to participate in a KEP. The aim of this study was to gather empirical data about health professionals’ views on AUPKE.

Methods

A total of 19 transplant professionals working in 4 Canadian transplant programs, and 19 non-transplant professionals (referring nephrologists and pre-dialysis nurses) working in 5 Quebec dialysis centres took part in semi-structured interviews between 11/2011 and 06/2013. The content of these interviews was analyzed using a qualitative data analysis method.

Results

Respondents’ recommendations focused on: (i) the logistics of AUPKE (e.g., not delaying the transplant for the compatible pair; retrieving organs locally; providing a good quality organ to the compatible pair; maintaining anonymity between pairs); (ii) medical teams (e.g., promoting KEPs within transplant teams; establishing a consensus among members; fostering collaboration between dialysis and transplant teams); (iii) the information provided to compatible pairs (e.g., ensuring that information is neutral); (iv) research (e.g., looking into all transplant options for O recipients; studying all potential impacts of KEPs and AUPKE); and (v) resources (ensuring there are sufficient resources in the system to manage the increased number of renal transplants performed). Transplant professionals were particularly concerned about the information provided to compatible pairs, whereas non-transplant professionals were mostly concerned about the lack of benefits for compatible pairs.

Conclusion

The results of this study can be used to develop future guidelines for the implementation of an AUPKE program in Canada. It will also be important to take into account the views of other stakeholders, such as patients and potential donors, to ensure the appropriate implementation of AUPKE.

Methods: An anonymous questionnaire was administered to all thirty-one final-year Canadian urology residents at the Queen’s Urology Examination Skills Training program (QUEST). The survey was devised to assess urological involvement and resident exposure to renal transplantation. Responses were closed ended and utilized a validated five-point Likert scale. Descriptive statistics and Pearson’s chi-squared test were used to analyze the responses and demonstrate correlations.

Results: All residents completed the survey. Urologists were involved in performing renal transplant surgery at most training centers across Canada (77.4%). The majority of residents believed that urology should remain highly involved with transplant (77.4%), and that it should be a mandatory component of residency training (64.5%). There was a positive correlation between the involvement of urology in renal transplantation at a resident’s training centre, and the opinion that urology should continue to play an important role in this field (r=0.51, p=0.003). However, barely half of the residents (51.6%) felt they had sufficient exposure to transplant surgery. Only 41.9% would feel comfortable performing transplant surgery after residency, and these residents were involved in an average of 30 transplant surgeries and 16 laparoscopic donor nephrectomies. A minority of residents had plans for fellowship training (9.7%) or future careers (12.9%) involving renal transplant.

Conclusion: Renal transplantation remains a limited component of the majority of residency training programs in Canada. Moreover, the number of residents intending to pursue fellowship training or a future career that involves transplant remains limited. Consequently, strong exposure to renal transplant during urology residency training is vital to ensuring urology remains highly involved in renal transplantation.

2 Department of Pediatrics, University of Alberta, Edmonton, AB; Alberta Transplant Institute, Edmonton, AB

3 Department of Pediatrics, University of Alberta, Edmonton, AB; Alberta Transplant Institute, Edmonton, AB; Department of Surgery, University of Alberta, Edmonton, AB.

Methods / Results: Two different protocols were used to expand human peripheral Tregs: Tregs were stimulated with anti-CD3/28-coated beads or artificial antigen presenting cells (APCs) that express human CD58, CD86 and the human CD32 Fc receptor to immobilize soluble anti-CD3 mAbs. We consistently achieved the highest Treg expansion with artificial APCs. This condition was further optimized by the use of serum-free OpTMizer T cell Expansion Medium, resulting in over 100-fold expansion after 14 days. These culture conditions were similarly effective at expanding thymic Tregs, which in comparison to peripheral Tregs retained a significantly higher proportion of FOXP3+ cells. To compare their suppressive function in vivo, we established a humanized-mouse model of GVHD which involves irradiation of immunodeficient NSG mice, followed by injection of 10 × 10^6 PBMCs. After ~2 weeks, human T cells engraft, and the mice lose weight and show clinical signs of GVHD. NSG mice will next be injected with PBMC in the absence or presence of different ratios of expanded peripheral or thymic Tregs. Clinical GVHD scores will be monitored and upon sacrifice, flow cytometry and histology will be performed to quantify the relative effectiveness of peripheral versus thymic Tregs.

Conclusion: We have developed optimized expansion conditions for peripheral and thymic Tregs and established a humanized-mouse model to test their function in vivo. Comparison of the potency of Tregs from peripheral blood and thymuses will reveal whether thymuses are a suitable source for continued development of Treg cell-based therapy.

We exposed WI-38 human fibroblasts to serum free medium, a classical inducer of autophagy, for up to 4 days. Serum starved fibroblasts showed increased LC3 II/I ratios and decreased p62 levels, confirming enhanced autophagy. This was associated with myofibroblast differentiation characterized by increased expression of α-smooth muscle actin (αSMA), collagen I, collagen III and formation of stress fibers. Inhibiting autophagy with three different PI3KIII inhibitors (3-MA, wortmannin, LY294002) or through Atg7 silencing prevented differentiation. Autophagic fibroblasts showed increased expression and secretion of Connective Tissue Growth Factor (CTGF), and CTGF silencing prevented myofibroblast differentiation. Phosphorylation of the mTORC1 target P70S6kinase was abolished in starved fibroblasts. Phosphorylation of Akt at Ser473, an mTORC2 target, was reduced after initiation of starvation but was followed by spontaneous rephosphorylation after 2 days of starvation, suggesting mTORC2 reactivation with sustained autophagy. Inhibiting mTORC2 activation with long-term exposure to rapamycin or by silencing rictor, a central component of the mTORC2 complex, abolished Akt rephosphorylation. Rictor silencing and treatment with rapamycin both prevented CTGF and αSMA upregulation, demonstrating the central role of mTORC2 activation in CTGF induction and myofibroblast differentiation. Finally, inhibition of autophagy with PI3KIII inhibitors or Atg7 silencing blocked Akt rephosphorylation.

Collectively, these results identify starvation-induced autophagy as a novel activator of mTORC2 signalling leading to CTGF induction and myofibroblast differentiation.

Methods: Using a porcine transplant model, liver grafts were either stored for 10hr at 4°C (CS, n=5) or preserved combining a total of 7hr cold storage plus 3hr SNEVLP (33°C, n=5). To simulate a clinical sequence including graft transportation and recipient hepatectomy time, SNEVLP was performed in between two series of cold storage of 4hr and 3hr respectively. Parameters of hepatocyte (AST, INR), endothelial cell (Hyaluronic Acid, CD31 immunohistochemistry), Kupffer cell (beta-Galactosidase), and biliary (alk. Phosphatase, Bilirubin) injury and function were determined. 7 day survival was assessed.

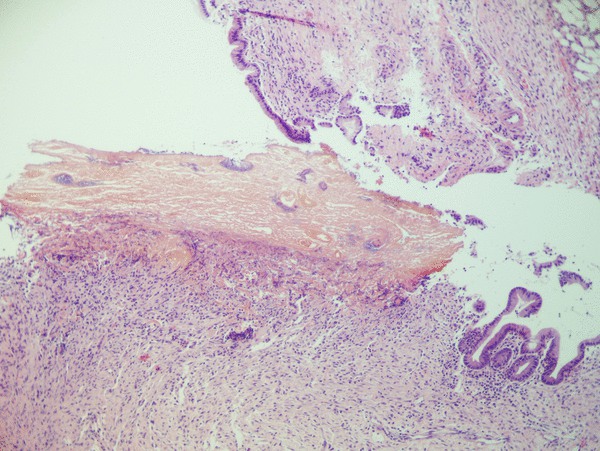

Results: Seven-day animal survival did not differ significantly between the CS and SNEVLP groups (40 vs 80%, p=0.8). No difference was observed between CS and SNEVLP groups regarding maximum INR (1.7 vs 2, p=0.9) or maximum AST within 48hr (2500±1100 vs 3010±1530 U/L, p=0.3). In contrast, 7hr after reperfusion the CS group showed 5-fold higher Hyaluronic Acid levels than the SNEVLP group (4195±2990 vs 737±450 ng/ml, p=0.01), indicating decreased endothelial cell function in the CS group. Beta-Galactosidase levels, a marker of Kupffer cell activation, were 2-fold higher in CS vs SNEVLP pigs (166±17 vs 95±25 U/mL, p<0.01). CD31 staining of parenchymal biopsies at 8hr after reperfusion demonstrated severe endothelial cell injury in the CS group only. Three days after transplantation, the CS group had higher alk. Phosphatase (179±9 vs 80±21 mcmol/L, p≤0.05) and Bilirubin levels (20±22 vs 6±2 mcmol/L) than the SNEVLP group. Bile duct histology at time of sacrifice revealed severe bile duct necrosis in 3 out of 5 animals with CS (picture 1), while no bile duct injury was observed in SNEVLP treated animals (picture 2).

picture 1 (H&E, x10)

picture 2 (H&E, x10)

Conclusion: SNEVLP preservation of DCD grafts reduces bile duct and endothelial cell injury following liver transplantation. Intermittent SNEVLP preservation could be a novel and clinically applicable strategy to prevent ITBL in DCD liver grafts with extended warm ischemia times.

2 Biotechnology Research Institute, Montreal, QC, Canada

3 Vanderbilt University School of Medicine, Nashville, TN, USA

4 ETH Zürich, Basel, GE

5 Institut de Recherche en Immunologie et Cancérologie (IRIC), Université de Montréal, Montréal (Québec) Canada

Objective: We aimed to evaluate whether LG3 regulates the migration and homing of mesenchymal stem cells (MSCs) and favors the accumulation of recipient-derived neointimal cells during rejection.

Methods and Results: We used a pure model of TV where mice are transplanted with a fully-MHC mismatched aortic graft followed by intravenous injection of recombinant LG3. Increased neointimal accumulation of α-smooth muscle actin (SMA) positive cells was observed in LG3-injected recipients. When green fluorescent protein (GFP)-transgenic mice were used as recipients, LG3 injection favored neointimal accumulation of GFP+ cells, confirming the accumulation of recipient-derived cells within the allograft vessel wall. Recombinant LG3 increased horizontal migration and transmigration of mouse and human MSC in vitro and enhanced ERK 1/2 phosphorylation. Neutralising β1 integrin antibodies in MSC in vitro or use of MSC from α2 integrin-/- mice (deficient in α2β1 integrins) led to decreased migration in response to recombinant LG3 and significantly decreased ERK 1/2 phosphorylation. To assess the importance of LG3/α2β1 integrin interactions in LG3-induced neointima formation, α2-/- mice or wild-type mice were transplanted with an allogeneic aortic graft followed by intravenous LG3 injections for 3 weeks. Reduced intima-media ratios and decreased numbers of neointimal cells showing ERK 1/2 phosphorylation were found in α2-/- recipients.

Conclusion: These results highlight a novel role for LG3 in neointima formation during rejection. LG3, through interactions with α2β1 integrins on recipient-derived cells leading to activation of the ERK 1/2 pathway, favors the accumulation of recipient-derived αSMA positive cells to sites of immune-mediated vascular injury.

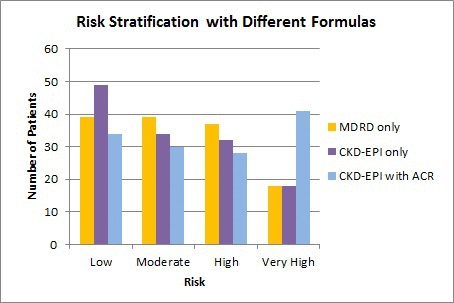

Methods: This is a cross-sectional study of prevalent kidney transplant patients. The variables were serum creatinine, eGFR (MDRD and CKD-EPI equations, ml/min/1.73 m²), and ACR (mg/mmol). The number of patients in each 2012 KDIGO eGFR stage (G1 ≥ 90, G2 = 60 – 89, G3a = 45 – 59, G3b = 30 – 44, G4 = 15 – 29, and G5 < 15 ml/min/1.73 m²), ACR stage (A1 < 3.0, A2 = 3.0 – 30, A3 > 30 mg/mmol) and risk category (low, moderate, high, very high) was calculated.
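The staging procedure described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' code: the abstract does not say which MDRD variant was used, so the 4-variable IDMS-traceable form (coefficient 175) and the 2009 CKD-EPI creatinine equation are assumed, with creatinine in mg/dL (divide µmol/L by 88.4). G1 is taken as ≥ 90 as in the KDIGO definition, and the risk grid is transcribed from the KDIGO 2012 heat map, which the abstract does not spell out. All function names are hypothetical.

```python
def egfr_mdrd(scr_mg_dl, age, female, black=False):
    """Assumed 4-variable IDMS-traceable MDRD estimate (ml/min/1.73 m^2)."""
    egfr = 175.0 * scr_mg_dl ** -1.154 * age ** -0.203
    if female:
        egfr *= 0.742
    if black:
        egfr *= 1.212
    return egfr


def egfr_ckd_epi(scr_mg_dl, age, female, black=False):
    """Assumed 2009 CKD-EPI creatinine estimate (ml/min/1.73 m^2)."""
    kappa = 0.7 if female else 0.9
    alpha = -0.329 if female else -0.411
    ratio = scr_mg_dl / kappa
    egfr = 141.0 * min(ratio, 1.0) ** alpha * max(ratio, 1.0) ** -1.209 * 0.993 ** age
    if female:
        egfr *= 1.018
    if black:
        egfr *= 1.159
    return egfr


def gfr_stage(egfr):
    """KDIGO 2012 GFR category for an eGFR in ml/min/1.73 m^2."""
    for lower_bound, stage in ((90, "G1"), (60, "G2"), (45, "G3a"), (30, "G3b"), (15, "G4")):
        if egfr >= lower_bound:
            return stage
    return "G5"


def acr_stage(acr_mg_mmol):
    """KDIGO 2012 albuminuria category for an ACR in mg/mmol."""
    if acr_mg_mmol < 3.0:
        return "A1"
    if acr_mg_mmol <= 30.0:
        return "A2"
    return "A3"


# Risk grid transcribed from the KDIGO 2012 heat map (columns: A1, A2, A3).
RISK_GRID = {
    "G1": ("low", "moderate", "high"),
    "G2": ("low", "moderate", "high"),
    "G3a": ("moderate", "high", "very high"),
    "G3b": ("high", "very high", "very high"),
    "G4": ("very high", "very high", "very high"),
    "G5": ("very high", "very high", "very high"),
}


def risk_category(egfr, acr_mg_mmol):
    """Combine the GFR and ACR categories into a KDIGO risk category."""
    column = ("A1", "A2", "A3").index(acr_stage(acr_mg_mmol))
    return RISK_GRID[gfr_stage(egfr)][column]
```

For example, an eGFR of 50 with an ACR of 10 mg/mmol falls in G3a/A2 and is classed as high risk, whereas the same eGFR with an ACR below 3 mg/mmol would be moderate; this is the kind of shift that adding ACR to eGFR produces.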

Results: The cohort comprised 133 patients: mean age 54.6 years, 30% female, 0.7% African Canadian, 26% diabetic, median transplant age 9.7 years, 35% living donor. Compared to MDRD, CKD-EPI classified subjects to the same stage in 85.8%, to a less severe stage in 13.5%, and to a more severe stage in 0.7%. For risk stratification, compared with eGFR alone, incorporating ACR increased the proportion of patients at “very high” risk from 14% to 31%, and overall 47% of patients moved to a higher risk group.

Conclusions: This study suggests switching from MDRD to CKD-EPI equation has minimal impact on staging and that a large proportion of kidney transplant patients may be at “very high” risk for important clinical outcomes when eGFR and ACR are considered. The main limitation of this study is that the 2012 KDIGO risk stratification system has not been validated in kidney transplant patients, an area to be addressed by future studies.

2 Western University

Methods: Perfusate samples were collected immediately after the kidney was removed from the pump from DBD (n=9) and DCD (n=4) donors. After a purification process to remove the starch from the perfusates, two-dimensional gel electrophoresis was performed on each sample. Protein expression was analysed using PDQuest measurement software. Spot levels that correlated with each type of donor were identified and then sent for mass spectrometry.

Results: Three spots that were significantly associated (p<0.05) with DCD donors emerged from the analysis and they were identified as fatty acid binding protein, apolipoprotein, and proapolipoprotein.

Conclusion: Although these biomarkers were previously noted in renal ischemia-reperfusion injury, we have identified them here as released prior to reperfusion. The known role of these proteins in lipid peroxidation suggests that this may be part of the mechanism of injury to DCD kidneys and may hint at a potential target for pharmacological intervention, which could be applied while the kidney is being cold perfused.

2 Ochsner Medical Center

Background: Adolescents with solid organ transplants (SOT) demonstrate high rates of medication non-adherence and higher rates of graft loss compared to all other age groups. Self-management interventions encompass information-based material designed to achieve disease-related learning and changes in the participant’s knowledge and skill acquisition, while providing meaningful social support. These interventions have had some success in chronic disease populations by reducing symptoms and promoting self-efficacy and empowerment. Using findings from a needs assessment, we developed three modules (Diet, Medication, and Lifestyle) of an Internet-based self-management program for youth with SOT. This program contains information, graphics, peer experiences and self-management strategies. The purpose of this study was to determine the usability and acceptability of the online program from the perspectives of youth with SOT.

Methods : Participants were recruited from SOT clinics at one large pediatric tertiary care centre in Canada. Three iterative cycles (seven patients per iteration) of usability testing took place to refine the website prototype. Study procedures involved participants finding items from a standardized list of features and communicating any issues they encountered, followed by a semi-structured interview to generate feedback about what they liked and disliked about the program.

Results : Twenty-one post-transplant teens (mean age 15; 7 female) found the website content to be trustworthy; they liked the picture content and found the videos of peer experiences to be particularly helpful. Teenagers had some difficulties finding information within sub-modules and suggested a simpler design with easier navigation.

Conclusions and Future Direction: This web-based intervention is appealing to teenagers and may foster improved self-management of their SOT. Nine additional modules are being developed and will undergo usability testing before the program is finalized. In the future, a randomized controlled trial will determine the feasibility and effectiveness of this online self-management program on adherence, graft survival and quality of life.

2 Montreal Children's Hospital, McGill University Hospital Center

3 Hôtel-Dieu, Centre Hospitalier de l’Université de Montréal

4 Hôpital Notre-Dame, Centre Hospitalier de l’Université de Montréal

5 Hôpital Saint-Luc, Centre Hospitalier de l’Université de Montréal

Methods To better portray the evolution of MRSA-colonized lung transplant recipients, we conducted a 4-year retrospective observational study of our cohort of patients colonized with MRSA, analyzing their outcomes in the first year following lung transplant.

Results Of the 128 lung transplantations carried out in our facility over 4 years, 23 were in patients colonized with MRSA, a prevalence of 18%. Of these 23 patients, 6 died within the first year after transplant, a one-year survival rate of 74% in this subgroup. This MRSA-colonized cohort’s average intensive care unit length of stay was 11 days (1-134, median 5 days) and the average hospital length of stay was 31 days (13-134, median 22 days). This cohort of MRSA-colonized patients had a hospitalization rate of 0.82 per patient-year, with 30% of patients accounting for all hospitalizations. A total of 71 respiratory infections were treated over 1 year of follow-up, including 21 (30%) MRSA infections. There were 9 (39%) patients who developed reperfusion injury after the transplant and 9 (39%) patients who developed bronchial stenosis during follow-up. Only 5 cases of biopsy-proven acute rejection and 1 case of bronchiolitis occurred in our cohort during the first year after transplant. All deaths were attributable to complicated pneumonias, 50% of which were caused by MRSA.

Conclusion In the first year after lung transplant, our cohort of MRSA-colonized patients showed a high rate of respiratory tract infections with only a minority due to MRSA, as well as a survival rate comparable to the general lung transplant population.

2 Partner Family Health Care Centre, Toronto Western Hospital, University Health Network, Toronto CA

Two self-administered questionnaires on the primary care management of KTR were developed and implemented. One survey investigated the perspectives of KTRs at an urban transplant centre on PCP performance, comfort level with their PCP, support received for self-management, and barriers to ideal care. The second survey targeted PCPs of KTR assessing their attitudes and practice patterns towards similar domains in addition to their communication with transplant centres.

A total of 502 patients and 209 physicians completed the survey (response rates of 77% and 22%, respectively). The majority (76%) of patients indicated that a PCP was involved in their care. Patients felt comfortable receiving care for non-transplant related issues, vaccinations, and periodic health examinations from their PCP. Similarly, PCPs felt comfortable providing such care. While only 23% of patients felt uncomfortable with their PCP managing their immunosuppressive medication, the majority (75.3%) of PCPs felt uncomfortable doing so. PCPs tended to rate their support for patient self-management better than that reported by patients. Of the physicians, 73% responded that they were currently providing care to KTR. The majority of physicians specified that they rarely (57%) or never (20%) communicate with transplant centres. PCPs’ most commonly stated barriers to delivering optimal care to KTR were insufficient guidelines provided by the transplant centre (68.9%) and lack of knowledge in managing KTR (58.8%). The resources suggested by PCPs to improve their comfort level in managing KTR were written guidelines and continuing medical education activities related to transplantation.

Our results suggest that there is insufficient communication between the transplant centres, PCPs, and patients. The modes for the provision of resources needed to bridge the knowledge gap for primary care physicians in the management of such patients need to be further explored.

2 The Hospital for Sick Children Physiology and Experimental Medicine; Kinesiology and Physical Education, University of Toronto

3 The Hospital for Sick Children Health Evaluative Sciences

Purpose : To objectively determine PA and fitness levels, and to examine potential correlates of PA in children post-LTx.

Methods : 20 children (7 males, mean age 14.2 yrs ± 2.2) > 1 year post-LTx (mean 10.1 ± 4.3 yrs) were studied. Peak oxygen consumption (VO2peak) was measured through graded cardiopulmonary cycle ergometry. Muscle strength, endurance and flexibility were assessed via the Fitnessgram®. Moderate to vigorous PA (MVPA) and steps/day were determined with an Actigraph (GT3X) accelerometer worn for 7 consecutive days. Questionnaire measures included: Children’s Self-Perceptions of Adequacy in and Predilection for Physical Activity Scale, Pediatric Quality of Life Multidimensional Fatigue Scale and Physical Activity Perceived Barriers and Benefits Scale. All measures were compared to normative values for healthy children.

Results : VO2peak was low (mean 33.3 ± 7.5 ml/kg; 76.9 ± 15.5% predicted) and participants took fewer steps per day (mean 6790.6 ± 2941.8) compared to healthy children (11,220 steps/day). MVPA (23.7 ± 9.6 minutes/day) was accumulated at moderate intensity only and no subjects met national recommended PA guidelines. Six participants (30%) attained the healthy fitness zone for abdominal strength and 1 participant (5%) for pushups (Fitnessgram® criterion measures). Fatigue (69.5 ± 14.9) and self-efficacy (56.6 ± 9.5) were lower than reported levels in healthy children and similar to several other chronic disease populations. The most commonly reported perceived barrier to PA was “I am tired.” A positive correlation was shown between self-efficacy and MVPA (r=0.59, p=0.016) and between self-efficacy and fatigue (r=0.51, p=0.025).

Conclusion : Children post-LTx show below normal levels of PA and VO2peak and their perceived fatigue is a common barrier to PA. Self-efficacy correlates with fatigue and MVPA. Further investigation into the potential mediating effects of these correlates is warranted to guide development of innovative and effective PA intervention strategies in order to maximize long-term health outcomes in children post-LTx.

Objective: To compare cardiovascular risk scores by both formulae in our cohort of stable RTR.

Methods: A cross-sectional chart review was undertaken of 270 consecutive RTR transplanted from 1979 - 2012. High risk MACE score was defined at ≥20%. Standard statistical analyses including multivariate analysis (MVA) and stepwise analysis were performed.

Results: Data were collected between Jan 2011 and Aug 2013. Mean transplant duration was 9.51±6.65 yrs. Mean eGFR by isotope dilution mass spectrometry (IDMS) was 59.19±28.26 ml/min. 46.3% had eGFR above 60 mL/min. Mean FRS score was 16.11% ±13.41. 41.5% were classified as high risk. 34.4% and 47.6% of patients with eGFR higher and lower than 60 mL/min respectively had high FRS. Univariate analysis (UVA) showed no significant association between eGFR and FRS (p=0.261). In the MVA, FRS correlated significantly with age (p=0.000), body surface area (p=0.001), body mass index (p=0.018), and TC:HDL ratio (p=0.000).

Mean MACE score was 14.80% ±15.32. 24.8% were classified as high risk. 11.2% and 36.6% of patients with eGFR above and below 60 mL/min respectively had high MACE scores. In the MVA, MACE scores correlated significantly with age (p=0.000), and eGFR (p=0.001). Stepwise analysis revealed an eGFR contribution to MACE score of -9.781 × ln(eGFR) + 44 (p < 0.01).

Conclusions: eGFR contributed significantly to MACE scores but not FRS. The higher FRS over MACE scores suggests that traditional Framingham variables contribute more to the total score compared to the impact of diminished transplant eGFR. We suggest a prospective validation study.
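The logarithmic eGFR term reported in the Results of this abstract can be evaluated directly. The sketch below only restates that fitted expression; the function name is hypothetical, and reading the output as percentage points of the MACE score is an assumption based on how the scores are reported.

```python
import math


def egfr_contribution_to_mace(egfr: float) -> float:
    """Evaluate the reported stepwise term: -9.781 * ln(eGFR) + 44.

    egfr is in mL/min. The output is assumed to be in percentage points
    of the MACE score (the abstract does not state the unit explicitly).
    """
    if egfr <= 0:
        raise ValueError("eGFR must be positive to take its logarithm")
    return -9.781 * math.log(egfr) + 44.0


if __name__ == "__main__":
    # Illustrative eGFR values only, not patient data from the study.
    for value in (30, 60, 90):
        print(value, round(egfr_contribution_to_mace(value), 2))
```

Because the coefficient on ln(eGFR) is negative, the term grows as eGFR falls, consistent with the higher proportion of high MACE scores reported below 60 mL/min.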

2 University of Calgary

3 Division of Nephrology, University of British Columbia, St. Paul's Hospital

4 Division of Nephrology, University of Toronto, Toronto General Hospital

Adult living kidney donors from 3 Canadian transplant centres completed validated QOL (WHOQOL-BREF) and HS (Short Form-36 Health Survey) questionnaires at the time of nephrectomy (baseline) and 6 months post-nephrectomy (follow-up). QOL and HS summary scores were calculated at baseline and follow-up, stratified by domain and donor type (directed versus non-directed/paired KPD). Scores between different donor types and between all donors and Canadian norms were compared using a t-test.

Among 94 donors (n=68 directed; n=6 non-directed and n=20 paired), QOL domains were similar between donor types at baseline and follow-up. Baseline and follow-up HS scores were similar between donor types except for lower social functioning in directed donors at baseline (p=0.03) (Table 1). Compared to Canadian norms, living kidney donors reported superior HS in all domains at baseline (p<0.01) and in 6 domains (p<0.05) at follow-up. Living donors were similar to Canadian norms for physical role limitations and energy/fatigue at follow-up (p>0.08).

QOL and HS are similar between directed and non-directed or paired exchange donors and HS is superior for living donors in almost all domains compared to Canadian norms. These findings support the expansion of the practice of paired exchange and non-directed kidney donation in Canada.

2 University of Saskatchewan

3 Saskatchewan Transplant Program

4 College of Medicine, University of Saskatchewan; Saskatchewan Transplant Program

Objective: To investigate whether MACE scores correlate with inflammatory chemokine levels in our RTR.

Methods: The MACE calculator was used to calculate the 7-year probability of CVE in 101 RTR. Forty-four immuno-inflammatory markers were measured by Luminex technique. Statistical analyses included stepwise analysis after a multivariate determination of significant demographic and inflammatory variables.

Results: The mean predicted risk of a CVE was 14.8% ± 15.4 [95% CI 12.9 – 16.7]. In the univariate analysis, MACE scores correlated significantly with age, HbA1c, serum creatinine, urea, eGFR (as measured by IDMS), systolic blood pressure, serum phosphate, thrombopoietin (TPO), chemokine ligand (CCL) 2, 5 and 11, vascular endothelial growth factor (VEGF) and granulocyte colony stimulating factor (G-CSF) (p<0.05). After multivariate analysis, however, only age, serum creatinine, eGFR and TPO remained significant (p<0.05). Similar to the previous analysis, the FRS demonstrated a lack of significance with all inflammatory markers and eGFR.

Conclusion : Contrary to the FRS, the MACE score is associated with levels of inflammation, suggesting its relevance in RTR. TPO emerged as a potential marker for future CVE in RTR. A prospective study is warranted to investigate the impact of these findings and to determine whether MACE more accurately predicts CVE.

Canadian Society of Transplantation (CST) Members’ Views on Anonymity in Organ Transplantation

2 School of Social Work, Faculty of Community Services, Ryerson University

3 The Department of Thematic Studies - Gender Studies Linköping University

4 Department of Psychiatry, University Health Network, University of Toronto

Methods: This study involved the electronic distribution of an eighteen-item survey to the CST membership, specifically asking respondents to consider the possibility and implications of open communication and contact between organ recipients and donor families. Respondents were also given an opportunity to elaborate with written comments.

Results: Of the 541 CST members surveyed, 106 replied (20%), with a completion rate of 57%. Among these respondents, 70% felt that organ recipients and donor families should only communicate anonymously, while 47% felt that identifying information could be included in correspondence between consenting recipients and donor families. 53% thought that organ recipients and donor families should be allowed to meet should they be interested in doing so; however, 27% of respondents were against this, and 20% neither agreed nor disagreed with them meeting. With the advent of social media facilitating communication, 38% of respondents felt that a re-examination of current policies and practices concerning anonymity in transplantation is necessary.

Conclusions: Further research and discussion concerning the views of organ recipients and donor families on the mandate of anonymity is both timely and relevant, and may influence future policy.

2 Department of Medicine University of Manitoba

3 Department of Pathology Emory University

Method: Nine patient sera with well characterized (using IgG single antigen bead (SAB) assay) post-transplant DSA were tested for C1q binding using the standard C1qScreen™ assay and two modified protocols: 1) wash modified (WM) protocol and 2) anti-human globulin (AHG) C1q enhanced (ACE) protocol. The number of class I/II DSA detected and DSA MFI values obtained with each protocol were compared.

Results: The C1qScreen™ assay was positive for only 30% of Class I (9/30) and 52% of Class II (15/29) IgG DSA specificities. The average MFI DSA values were markedly reduced compared with the IgG-SAB assay. The WM protocol exhibited higher average DSA MFI values (3.4 fold) compared to the standard protocol but only 2 additional DSA specificities were detected. In contrast, the ACE protocol identified 10 additional Class I (19/30; 63%) and 4 additional Class II (19/29; 66%) DSA specificities with an average increase in DSA MFI of 4.7 fold when compared with the standard C1qScreen™ assay.

Conclusions: The C1qScreen™ assay exhibits suboptimal assay sensitivity and fails to adequately identify many post-transplant complement fixing HLA DSA. The sensitivity of the C1qScreen™ assay to detect DSA can be improved with protocol modifications including introduction of wash steps and/or AHG enhancement. The ACE protocol in particular was the most sensitive of the protocols tested and detected the majority of post-transplant HLA DSA. Future studies will investigate the clinical significance of HLA DSA detected by the ACE protocol.

2 Department of Laboratory Medicine and Pathology University of Alberta

3 Department of Medicine University of Alberta

Ten sera from highly sensitized post-transplant patients with well characterized HLA antibodies (IgG single antigen bead (SAB) assay) were tested using C1qScreen™ and the ACE protocol. CDC and CDC-AHG crossmatches were performed using 1W60 LCT panels. Actual PRA values for CDC and CDC-AHG LCT were calculated (number of positive reactions / number of valid reactions) for each serum and were compared to the predicted PRA values based on C1q positive HLA antibody specificities.

PRA predicted based on antibody specificities identified with the ACE assay correlated well with CDC-AHG PRA (mean difference = 2.9%, range = -1.7 to 8.6%) but not with CDC PRA (mean difference = 26.2%, range = 7 to 48%). PRA predicted based on C1qScreen™ results correlated reasonably well with CDC PRA (mean difference = -4.8%, range = -39.7 to 17.2%) but not with CDC-AHG PRA (mean difference = -28%, range = -62 to 5%). Importantly, only 6/383 (1.6%) positive CDC-AHG reactions were not predicted by the ACE assay results. In contrast, a significant proportion of positive CDC reactions, 49/240 (20.4%), were predicted to be negative by standard C1qScreen™, suggesting poor assay sensitivity.

There is a good correlation between the CDC-AHG PRA and predicted PRA based on ACE assay HLA antibody test results. This suggests that the ACE assay may be used to predict CDC-AHG crossmatches.
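The PRA arithmetic used in this abstract (positive reactions over valid reactions, then a predicted-versus-actual difference) can be sketched as follows. The function names are hypothetical, and taking the difference as predicted minus actual is an inference from the signs of the reported values, not a stated definition.

```python
def percent(part: int, whole: int) -> float:
    """Return part/whole as a percentage, e.g. positive / valid reactions."""
    if whole <= 0:
        raise ValueError("denominator must be positive")
    return 100.0 * part / whole


def mean_difference(predicted: list[float], actual: list[float]) -> float:
    """Mean of (predicted PRA - actual PRA) across sera.

    The direction (predicted minus actual) is an assumption.
    """
    if not predicted or len(predicted) != len(actual):
        raise ValueError("need two non-empty lists of equal length")
    diffs = [p - a for p, a in zip(predicted, actual)]
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    # Proportions reported in the abstract.
    print(round(percent(6, 383), 1))    # CDC-AHG positives not predicted by ACE
    print(round(percent(49, 240), 1))   # CDC positives predicted negative by C1qScreen
    # Hypothetical per-serum PRA values, for illustration only.
    print(mean_difference([50.0, 80.0], [45.0, 81.0]))
```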

2 Liver Unit, University of Alberta, Edmonton, Alberta, Canada

3 Centre de Recherche du CHUM, Hôpital Saint-Luc, Montreal, Quebec, Canada

4 Multi-Organ Transplant Program, Dalhousie University, Halifax, Nova Scotia, Canada

5 British Columbia Transplant Program, Vancouver, British Columbia, Canada

6 Transplant Centre, Institute for Clinical and Experimental Medicine (IKEM), Prague, Czech Republic

7 Department of General, Visceral and Transplant Surgery, Hannover Medical School, Hannover, Germany

8 Policlinico di Tor Vergata, Rome, Italy

Methods : Patients received: Arm 1: tacrolimus QD (initial dose: 0.2mg/kg/day); Arm 2: tacrolimus QD (0.15-0.175mg/kg/day) plus basiliximab; Arm 3: tacrolimus QD (0.2mg/kg/day delayed to Day 5) plus basiliximab. All patients received MMF (IV 3-5 days then oral) and a single bolus of corticosteroid. Primary analysis (full-analysis set; FAS): eGFR (MDRD4) at Week 24. Secondary endpoints (per-protocol set) included graft and patient survival, and acute rejection (AR). Mortality rates were calculated using the safety-analysis set.

Results : 901 patients randomized; FAS: 295, 286 and 276 in Arms 1-3, respectively (23, 17, 17 from Canada). Baseline characteristics were comparable with mean baseline eGFR of 90.6, 89.3, 89.9 mL/min/1.73m2 in Arms 1-3, respectively. Mean tacrolimus QD trough levels were initially lower in Arm 2 vs 1 and 3; by Day 14, levels were comparable between arms and remained stable. Week 24: eGFR was higher in Arms 2 and 3 vs 1 (76.4 and 73.3 vs 67.4 mL/min/1.73m2; p<0.001 and p<0.047, respectively; ANOVA), and eGFR was numerically higher in Arm 2 vs 3 (p=ns); renal function was preserved in all arms (Figure). Kaplan-Meier estimates of graft survival in Arms 1-3: 86.5%, 87.7% and 88.6% (p=ns; Wilcoxon-Gehan); patient survival: 89.3%, 89.1% and 90.4% (p=ns); and without AR: 79.9%, 85.7% and 79.6% (Arm 2 vs 1: p=0.0249, Arm 3 vs 1: p=ns; Arm 2 vs 3: p=0.0192). Overall mortality rate: 5.1% and mortality rate for males vs females: 5.8% and 3.6%, respectively. AEs were comparable between arms, with a low incidence of diabetes mellitus and no major neurologic disorders.

Conclusion : An initial low dose of prolonged-release tacrolimus (0.15-0.175mg/kg/day) plus MMF and induction therapy (without maintenance steroids) was associated with better renal function and a significantly lower incidence of AR over 24 weeks vs the other regimens. No advantages were observed with delaying the initiation of tacrolimus QD.

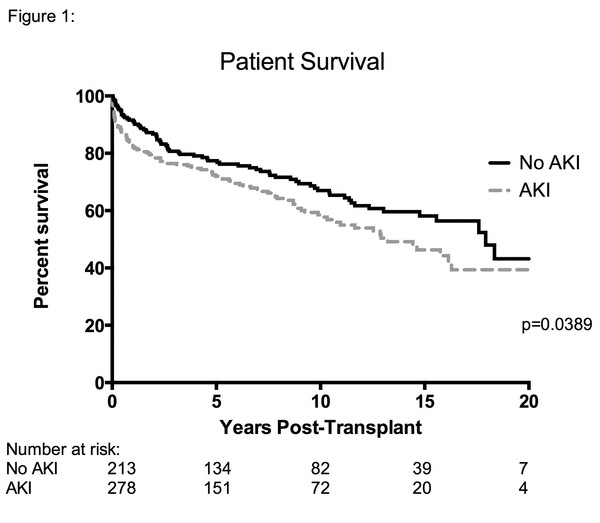

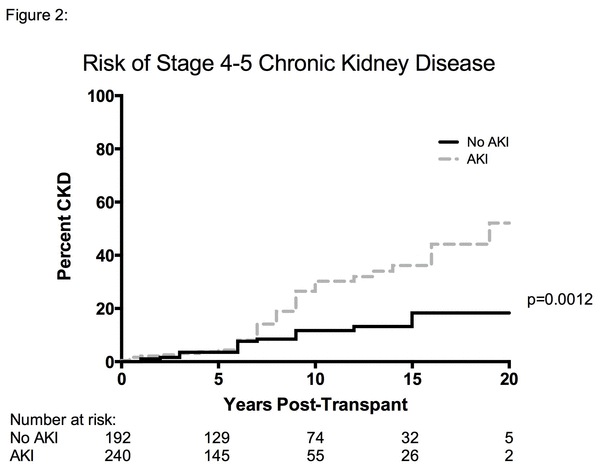

Purpose : In our cohort study, we examined the impact of AKI on long-term patient (pt) survival and on the incidence of stage 4 and 5 CKD.

Methods: We studied 491 OLT recipients at a single center between 01/1990 and 08/2012, and pts were followed for up to 20 years. We identified 278 pts (56.6%) with AKI, defined as either an increase in serum creatinine (SCr) ≥26.5 µmol/L within 48 hours or an elevation in SCr to ≥1.5 times baseline within 7 days (KDIGO criteria).

Results: In a multivariable Cox proportional hazards model, survival was worse in pts with AKI (HR: 1.46, 95% CI: 1.08-1.96, p=0.014, Figure 1). The median survival time was 13.2 yrs for pts with AKI, and 17.9 yrs in pts without AKI. Severe (stage 3) AKI was associated with worse pt survival (HR: 2.29, 95% CI: 1.46-3.58, p=0.001), while AKI stages 1 and 2 were not statistically different. The risk of developing stage 4-5 CKD was also higher in pts with AKI compared to non-AKI pts (17.5% vs. 9.1%) with a HR of 2.28 (95% CI: 1.30-4.00, p=0.004, Figure 2).

Conclusions: Our findings suggest that AKI after OLT is associated with poor long-term outcomes, including worse pt survival and higher incidence of CKD stage 4-5. Strategies to prevent and manage OLT pts with AKI need to be developed.
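The serum creatinine definition of AKI given in the Methods of this abstract can be written as a small check. This is a simplified sketch: the function and parameter names are hypothetical, the 48-hour rise is measured from baseline (KDIGO allows any 48-hour window), and the urine output criterion, which the abstract does not mention, is omitted.

```python
def meets_aki_definition(baseline_scr: float,
                         peak_scr_48h: float,
                         peak_scr_7d: float) -> bool:
    """Apply the two creatinine criteria quoted in the abstract.

    All values are serum creatinine in µmol/L.
    - absolute rise of >= 26.5 µmol/L within 48 hours, or
    - rise to >= 1.5 times baseline within 7 days.
    """
    if baseline_scr <= 0:
        raise ValueError("baseline creatinine must be positive")
    absolute_rise = (peak_scr_48h - baseline_scr) >= 26.5
    relative_rise = peak_scr_7d >= 1.5 * baseline_scr
    return absolute_rise or relative_rise


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    print(meets_aki_definition(80, 106.5, 106.5))  # absolute criterion met
    print(meets_aki_definition(80, 100, 110))      # neither criterion met
```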

2 Department of Surgery, Multi-Organ Transplant Program, McGill University Health Center.

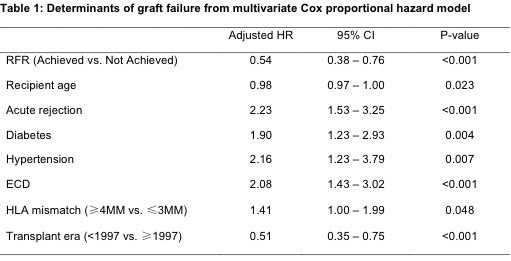

AIM: To calculate renal function recovery (RFR) based on recipient and donor eGFR, and to evaluate the impact of RFR on long-term death censored graft survival (DCGS).

METHODS: We studied adult deceased donor kidney transplant (KTx) recipients transplanted between January 1990 and June 2012. The last donor serum creatinine prior to procurement was used to estimate donor eGFR. The predicted eGFR was calculated as donor eGFR/2. The recipient eGFR was calculated using the average of the best three eGFR values observed during the first 3 months post-KTx. The abbreviated MDRD equation was used to estimate both donor and recipient eGFR. Renal function recovery (RFR) was defined as the achievement of predicted eGFR as determined by the donor eGFR. Recipients who achieved RFR were compared to those who did not, with DCGS as the primary outcome.

RESULTS: 1023 KTx were performed during the study period, of which 705 were studied after exclusion for missing data, living donors and combined transplants. The predicted eGFR was achieved in 57% of patients, and 138 graft failures (20%) occurred during the follow-up period. Recipients who achieved the predicted eGFR had significantly better DCGS compared to those who did not (HR for graft failure 0.57, 95% CI 0.40-0.79, P=0.0008, Figure 1). ECD kidneys, recipient age, diabetes, hypertension, HLA-mismatch, acute rejection and transplant era were also significant determinants of graft survival in a multivariate model (Table 1).

CONCLUSION: Recovery of predicted eGFR based on donor eGFR correlates with improved DCGS in KTx recipients.
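The definition of renal function recovery in the Methods of this abstract reduces to a short calculation. The sketch below takes eGFR values as already computed inputs (the MDRD step is not reproduced); the function names are hypothetical, and treating "achievement" as recipient eGFR greater than or equal to predicted eGFR is an assumption.

```python
def predicted_egfr(donor_egfr: float) -> float:
    """Predicted recipient eGFR, defined in the abstract as donor eGFR / 2."""
    return donor_egfr / 2.0


def recipient_egfr(egfr_values_first_3_months: list[float]) -> float:
    """Average of the best (highest) three eGFR values in the first 3 months."""
    if len(egfr_values_first_3_months) < 3:
        raise ValueError("need at least three eGFR values")
    best_three = sorted(egfr_values_first_3_months, reverse=True)[:3]
    return sum(best_three) / 3.0


def achieved_rfr(donor_egfr: float, egfr_values_first_3_months: list[float]) -> bool:
    """RFR is taken here as recipient eGFR >= predicted eGFR (assumed threshold)."""
    return recipient_egfr(egfr_values_first_3_months) >= predicted_egfr(donor_egfr)


if __name__ == "__main__":
    # Hypothetical donor and recipient values, for illustration only.
    values = [40.0, 55.0, 50.0, 48.0]
    print(predicted_egfr(90.0), recipient_egfr(values), achieved_rfr(90.0, values))
```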

2 University of Calgary

3 University of Saskatchewan

2 Department of Pathology, Lyman Duff Medical Sciences Building, McGill University, Montreal, QC, Canada

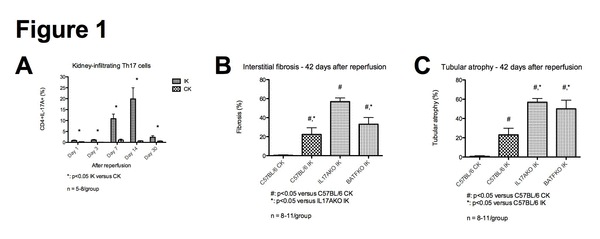

Methods: Wild-type (WT), IL-17AKO, and BATFKO (Th17 lineage deficient) mice on a C57BL/6 background underwent unilateral renal pedicle clamping for 30 minutes, followed by reperfusion for up to 42 days. Injured (IK) and control (CK) kidneys were digested in collagenase, and lymphocytes isolated by density gradient. Phenotypic analysis of Th17, Th1, Th2, and Treg cells was performed by flow cytometry. IF/TA was quantified by a blinded pathologist on H&E and Masson’s Trichrome kidney sections.

Results: CD4+IL-17A+ Th17 cells progressively infiltrated the IK but not the CK in WT mice, peaking at 14 days after reperfusion (Fig. 1A). The majority co-expressed the Th17-specific transcription factor RORγt, and co-secreted IL-17F. At 42 days after reperfusion, significant IF/TA developed in the IK of WT mice, while the CK was IF/TA-free. In comparison to WT mice, IL-17AKO had worse IF/TA, while deficiency in both IL-17A and F (BATFKO) only worsened tubular atrophy (Fig. 1B-C). IL-17F alone did not explain worse fibrosis as its expression in IL-17AKO was reduced compared to WT mice. There was no difference in the expression of IFN-γ (Th1), IL-4 (Th2), or FoxP3 (Treg) by kidney-infiltrating CD4+ T cells after reperfusion between WT and KO mice.

Conclusion: Following ischemia-reperfusion injury, there is a long-lasting infiltration of Th17 cells in the IK. Contrary to evidence in heart and lung transplant models, a defect in the Th17 pathway is fibrogenic after renal AKI, and worsens tubular atrophy. Targeting the Th17 pathway to reduce acute damage after AKI could therefore worsen chronic injury.

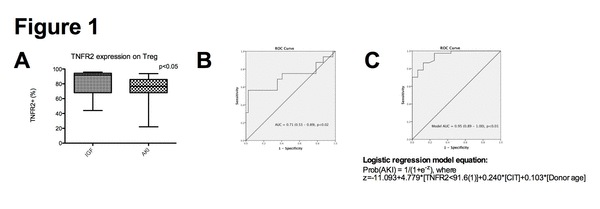

Methods: 53 consecutive deceased donor kidney transplant recipients were divided into AKI (n=37) and immediate graft function (IGF, n=16) groups based on post-transplant dialysis and 24-hour serum creatinine. Donor, organ procurement, and recipient characteristics were similar between both groups except for donor age and cold ischemic time (CIT). Pre-transplant recipient peripheral blood TNFR2 expression was quantified by flow cytometry, gating on CD4+CD127- Treg. Treg suppressive function was measured by in vitro suppression of autologous CD4+CD25- CFSE-labeled effector T cell proliferation by CD4+CD25+ Treg.

Results: Pre-transplant TNFR2 expression on Tregs correlated with Treg suppressive function (r=0.38, p=0.02) in a subset of 37 recipients, and was decreased in AKI compared to IGF recipients (p<0.05; Fig. 1A). It also accurately predicted AKI in ROC curve analysis (AUC=0.71, p<0.02; Fig. 1B) with a sensitivity of 97.3% and a specificity of 56.3% at a cut-off value of 91.6%. This cut-off value remained a significant predictor of AKI in multivariate logistic regression (OR=119, p<0.01) adjusting for dissimilar variables between our groups (donor age, CIT). Combining TNFR2 expression on Tregs with donor age and CIT to form a logistic regression model improved the predictive accuracy for AKI (AUC=0.95, p<0.01; Fig. 1C).

Conclusion: TNFR2 expression on Tregs is a rapid surrogate marker of Treg suppressive function, and predicts AKI pre-transplant independent of or in combination with donor age and CIT. Its measurement could further guide organ allocation to prevent AKI.
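The sensitivity and specificity quoted in the Results of this abstract follow the usual definitions, sketched below. The cell counts in the example (36 of 37 AKI recipients and 9 of 16 IGF recipients correctly classified) are back-calculated from the reported percentages and group sizes; they are an assumption and do not appear in the abstract. The function names are hypothetical.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Proportion of AKI recipients correctly flagged by the cut-off."""
    return true_positives / (true_positives + false_negatives)


def specificity(true_negatives: int, false_positives: int) -> float:
    """Proportion of IGF recipients correctly classified as not at risk."""
    return true_negatives / (true_negatives + false_positives)


if __name__ == "__main__":
    # Assumed counts, inferred from n=37 AKI and n=16 IGF and the reported
    # 97.3% sensitivity and 56.3% specificity; not stated in the abstract.
    print(f"{100 * sensitivity(36, 1):.1f}%")
    print(f"{100 * specificity(9, 7):.2f}%")
```

A cut-off with high sensitivity and modest specificity, as reported here, misses few AKI cases at the cost of flagging a sizeable share of recipients who go on to have immediate graft function.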

Methods: Human peripheral blood mononuclear cells (Cedarlane) were cultured and pre-treated with 2ME2 overnight (18H) before activation with Cell Stimulation Cocktail (4H) (eBioscience). The cultured medium was collected for ELISA assays and whole-cell lysates were collected for western immunoblotting. Five-day cultured cells were stained with CellTrace Violet proliferation dye for flow cytometry (FCM). Live-cell and fixed-cell imaging was performed using an LSM-510 confocal microscope (Carl Zeiss) with tetramethylrhodamine methyl ester (TMRM) stain (10nM, Molecular Probes) and TUNEL assay. Annexin V/PI assays were analyzed by FCM.

Results: Levels of the T-cell activation markers TNF-α (p=0.0025) and IFN-γ (p=0.0216) in 2ME2-treated activated T-cells were reduced relative to controls. As well, when compared to controls, activated T-cell proliferation was significantly blunted upon treatment with 2ME2, with an observed 10% decrease in apoptosis, no change in necrotic events, and no decrease in mitochondrial membrane potential or caspase-9 activity. These results collectively suggest that the effect of 2ME2 is independent of a mitochondrial-mediated apoptotic mechanism.

Conclusions: Our study is the first to show that 2ME2 is able to decrease the immune response of activated T-cells in a rejection setting. The mechanism by which 2ME2 modulates its anti-rejection effects is related to its ability to induce cell-cycle-arrest by a cellular senescence phenomenon that warrants further investigation. As 2ME2 has a low side-effect profile, it may be a possible oral-immunomodulatory adjunctive therapy for individuals undergoing solid organ transplantation.

2 Division of Dermatology, Department of Medicine, University of Alberta

3 Department of Surgery, University of Alberta

4 Department of Medicine, University of Alberta

5 Department of Laboratory Medicine and Pathology, University of Alberta

METHOD: We conducted a retrospective study of a 14-year-old boy with cystic fibrosis treated with double LTx. The patient underwent LTx at age 10, after which he was maintained on tacrolimus, mycophenolate mofetil and oral prednisone. Pre-LTx, the unusual fungus Blastobotrys was isolated from his airway secretions, appearing again in the chest tube fluid post-LTx. This was treated successfully with IV and nebulized liposomal amphotericin B (L-AmpB), IV caspofungin and 23 months of oral voriconazole.

Voriconazole was stopped when the patient developed severe photosensitivity, despite the use of sunscreen. However, 3 months later the patient developed an Aspergillus airway infection which again required treatment with IV voriconazole, along with IV caspofungin and nebulized L-AmpB. Prophylactic oral voriconazole was continued for 1 year, along with lifelong nebulized L-AmpB. During this time, the patient developed numerous large irregular lentigines on sun-exposed areas and signs of accelerated skin ageing. At 44 months post-LTx, he developed a verrucous lesion near his lower left eyelid.

RESULTS: Skin biopsy found well differentiated squamous cell carcinoma, which was successfully treated with surgical excision.

CONCLUSION: Skin cancer is a rare occurrence in pediatric patients who have undergone solid organ transplantation. Severe photosensitivity due to voriconazole and insufficient sun protection predisposed our patient to develop squamous cell carcinoma. Excision of the lesion was curative. When voriconazole is used post-transplant, it should be discontinued once a patient's risk of fungal disease diminishes. Sunscreen alone is insufficient to protect against sunburn. Exposed skin areas should be well covered. Early reporting and regular examinations by a dermatologist are recommended.

2 University of British Columbia, Vancouver, BC

3 Erasmus MC Medical Center, Rotterdam, Netherlands

Methodology: Thymuses (n=5) were obtained during pediatric cardiac surgery. After mechanical dissociation, CD25+ thymocytes (TC) were isolated by magnetic cell separation and expanded with α-CD3, IL-2, rapamycin and artificial APCs. After 7 days, rapamycin was removed; cells were further cultured without (CD25+ TCexp) or with IL-12 (CD25+ TCexp.IL12). CD25-depleted cells were controls. Phenotype was defined by flow cytometry. Stability of FOXP3 expression was assessed by analyzing the methylation status of the Treg Specific Demethylated Region (TSDR) within the FOXP3 gene. Expanded cells were co-cultured with α-CD3/CD28-stimulated PBMC to determine suppressive capacity, analyzing proliferation and IL-2 production by flow cytometry and ELISA, respectively.

Results: Isolated CD25+ TC were FOXP3+Helios+CTLA-4+PD-1dimTGF-β-. After culturing, CD25+ TCexp expanded 11- to 59-fold with high viability (79-97%) and maintained high FOXP3 expression. The TSDR was demethylated in 82-97% of the cells. IFN-γ- and IL-2-producing cells were infrequent (0.5-7% and 1-4%, respectively). CD25+ TCexp.IL12 showed a higher expansion capacity (26- to 107-fold; p=0.06); viability (84-96%), FOXP3 expression and the methylation status of the TSDR (% demethylated: 89-100%) were comparable with CD25+ TCexp. In contrast to controls, addition of IL-12 did not increase frequencies of IFN-γ- and IL-2-producing cells within CD25+ TCexp.IL12 (2-6% and 1-4%, respectively). TGF-β was upregulated on both CD25+ TCexp and CD25+ TCexp.IL12. Both CD25+ TCexp and CD25+ TCexp.IL12 potently suppressed proliferation and IL-2 production by PBMC even at a 1:10 ratio of Tregs:PBMC.

Conclusion: Expanded CD25+FOXP3+ Tregs isolated from pediatric thymuses maintain stable phenotype and function under inflammatory conditions, including stable FOXP3 expression, absence of IFN-γ-producing cells and potent suppressive capacity. These results indicate that discarded thymuses are potentially an excellent source of Tregs for cellular therapy.

2 Multi-organ Transplant Program, University Health Network, Toronto

Methods: A retrospective study of a child with CF and Blastobotrys species (Btbs), an emerging fungal pathogen, pre- and post-LTx.

Results: A 9-year-old boy with CF and severe bilateral bronchiectasis inhaled soil particles while playing in a pit. He lost 450 cc of FEV1 (31% predicted). His sputum persistently grew Pseudomonas and 2 strains of Btbs, confirmed by a bronchoalveolar lavage culture. He developed severe mucus plugging, permanent bilateral lower lobe collapse and oxygen dependency requiring nocturnal BiPAP, nebulized pulmozyme and hypertonic saline. He had severe sinus disease on CT. Based on susceptibility results, he was commenced on antifungal therapy: IV caspofungin, nebulized liposomal amphotericin (L-AmB) and oral voriconazole. Sinus aspiration pre LTx isolated no Btbs. He received double LTx 14 months later. The explanted lung showed Btbs on microscopy and culture but no angioinvasive disease. The airways were filled with neutrophils. Post LTx, he received tacrolimus, CellCept and prednisone but no induction immunosuppression. He received 2 weeks of IV antibiotics and 3 weeks of IV L-AmB. Fluid from the right chest tube grew Btbs. He was extubated on day 3, chest tubes were removed on day 9, and he was discharged from PICU on day 10 and home on day 36.

Post LTx, the patient received 3 months of nebulized L-AmB, 9 weeks of IV caspofungin and 22 months of oral voriconazole. He had 1 grade 3 rejection, successfully treated with IV pulse steroids. Chest HRCT found shadows in the peripheral right lower lobe and pleura that improved over 12 months. BAL (9) and transbronchial biopsies (7) found no evidence of fungus post LTx. Sinus CT findings were unchanged. He was well with an FEV1 of 75% and no evidence of Btbs found on BAL and lung biopsies 2 years post LTx. He was successfully treated at 28 months post-LTx for an episode of Aspergillus airway sepsis. He was put on life-long nebulized L-AmB. His FEV1 was 65% at 57 months post-LTx.

Conclusions: With aggressive combination antifungal therapy, Btbs was treated successfully with long term survival post-LTx.

Methods: Pediatric solid organ transplant recipients enrolled in our transplant Biobank from 2010-2013 were included. Medical records were reviewed for clinical, demographic, and medical history. Patients undergoing re-transplants were ineligible.

Results: Thirty-seven patients were included (21 heart, 15 liver, 1 kidney). During the first 48h after tacrolimus initiation (median starting dose, 0.09 mg/kg), methylprednisolone (n=5) and amlodipine (n=5) were the most frequently used CM, followed by lansoprazole (n=3), fluconazole (n=2), nifedipine (n=2), and amiodarone (n=1). The median circulating tacrolimus level at 36-48 hours post drug initiation was 7.9 ng/mL (range 0.9–29.2 ng/mL). Among patients receiving no CM known to interact with tacrolimus, 15/28 (54%) experienced out-of-range levels during the first 48h after tacrolimus initiation. In contrast, among patients receiving at least one CM, 8/9 (89%) experienced out-of-range levels (p=0.06). The median tacrolimus dose at 48 hours was 0.21 mg/kg for patients with ≤3 CM vs 0.35 mg/kg for >3 CM (p=0.14). Results from the larger biobank cohort are being analyzed.
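The comparison of out-of-range rates (15/28 vs 8/9) can be reproduced with a short calculation. The abstract does not state which test produced p=0.06, so the uncorrected Pearson chi-square below is an assumption made for illustration only; with one expected cell count below 5, an exact test might be preferred and would give a different p-value. The function and variable names are hypothetical.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns (chi2, p). For 1 degree of freedom the upper-tail p-value
    equals erfc(sqrt(chi2 / 2)).
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Counts taken from the Results paragraph above.
no_cm_out, no_cm_total = 15, 28   # no interacting CM: out-of-range / total
cm_out, cm_total = 8, 9           # at least one interacting CM: out-of-range / total

pct_no_cm = 100 * no_cm_out / no_cm_total
pct_cm = 100 * cm_out / cm_total

chi2, p_value = chi_square_2x2(
    no_cm_out, no_cm_total - no_cm_out,
    cm_out, cm_total - cm_out,
)

print(f"No CM: {pct_no_cm:.0f}% out of range; with CM: {pct_cm:.0f}% out of range")
print(f"chi2 = {chi2:.2f}, p = {p_value:.3f}")
```

Under this assumed test the p-value lands close to the reported 0.06, which is consistent with, but does not confirm, the authors' method.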

Conclusion: Medications that interact with tacrolimus may influence tacrolimus drug levels attained after drug initiation. The presence of such CM should be considered in the choice of starting tacrolimus dose.

2 Matthew Mailing Centre for Translational Transplantation Studies, London Health Sciences Centre; Depts. of Pathology, Western University

3 Matthew Mailing Centre for Translational Transplantation Studies, London Health Sciences Centre; Department of Medicine, Western University

2 Transplant and Regenerative Medicine Centre, Hospital for Sick Children, Toronto; Department of Psychology, Hospital for Sick Children, Toronto

3 Department of Rehabilitation, Hospital for Sick Children, Toronto

4 Transplant and Regenerative Medicine Centre, Hospital for Sick Children, Toronto; Department of Pediatrics, University of Toronto, Toronto

Methods: Participants were 3 - 6.99 years of age, >1 year post isolated LTx with age at LTx < 2 years. Control patients had chronic cholestatic liver disease (LD), still with native liver. All participants completed a range of neurocognitive and motor tasks, with parent and teacher questionnaires.

Results: A total of 18 participants, 13 LTx (mean age 4.69 years) and 5 LD (mean age 4.49 years), completed the testing battery. Indications: biliary atresia (17 participants) and alpha-1 antitrypsin (1 control). Overall intellectual abilities fell within the age-expected range for both groups, but LTx participants performed more poorly on two tasks, assessing visual construction (p=.037) and processing speed (p=.067, a trend that did not reach the conventional .05 threshold). LTx patients had a greater frequency of below-average scores on visual perception (29% vs .8% for LD) and motor coordination (24% vs .8% for LD). Parent and teacher reports indicated greater concerns among the LTx group for mood (e.g. low mood, anxiety), quality of life and adaptive behaviour skills.

Conclusions: Pre-school children with LTx before 2 years of age were within population norms across many domains, but demonstrated challenges in the areas of visual and motor functioning, with perceived problems of executive, emotional and adaptive functioning. These potential challenges may compromise early academic and functional skills acquisition. Thus, routine early neurodevelopmental assessment is vital among preschool-aged children post LTx to establish appropriate early educational and rehabilitation supports.

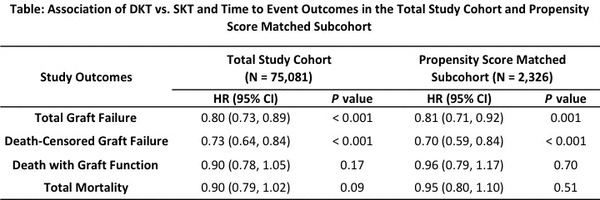

METHODS: This is a cohort study of all incident adult deceased donor kidney transplant recipients from 1 Jan 2000 to 31 Dec 2010 (followed until 31 Dec 2011) using the Scientific Registry of Transplant Recipients. The relation between DKT status and delayed graft function (DGF) was quantified using logistic regression models. Cox proportional hazards models were fitted to determine the association of DKT status with total graft failure, death-censored graft failure, death with graft function, and total mortality. To compare outcomes of DKT and SKT recipients with kidneys of similar quality, we used propensity score (PS) matching to balance all measured confounders.
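The propensity score matching step can be sketched as follows. The abstract does not specify the matching algorithm, the matching ratio, or any caliper, so the greedy 1:1 nearest-neighbour approach without replacement, the caliper of 0.05, and all identifiers and scores below are hypothetical choices for illustration. The sketch also assumes the propensity scores have already been estimated (for example by a logistic model of DKT status on the measured confounders).

```python
def greedy_match(treated, controls, caliper=0.05):
    """Greedy 1:1 nearest-neighbour matching without replacement.

    treated, controls: dicts mapping a unit id to its estimated propensity score.
    caliper: maximum allowed absolute difference in score for a valid match
             (hypothetical value, not taken from the abstract).
    Returns a list of (treated_id, control_id) pairs.
    """
    available = dict(controls)  # copy so the caller's dict is not modified
    pairs = []
    # Visit treated units in ascending score order so the result is reproducible.
    for t_id, t_score in sorted(treated.items(), key=lambda kv: kv[1]):
        if not available:
            break
        # Closest remaining control by absolute score difference.
        c_id = min(available, key=lambda cid: abs(available[cid] - t_score))
        if abs(available[c_id] - t_score) <= caliper:
            pairs.append((t_id, c_id))
            del available[c_id]  # each control is used at most once
    return pairs


# Hypothetical propensity scores, for illustration only.
dkt = {"D1": 0.30, "D2": 0.55, "D3": 0.90}
skt = {"S1": 0.10, "S2": 0.31, "S3": 0.52, "S4": 0.60}

pairs = greedy_match(dkt, skt)
print(pairs)
```

In this toy input, one DKT recipient has no SKT recipient within the caliper and is left unmatched, which is how a matched subcohort can end up slightly smaller than the original treated group.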

RESULTS: The total study cohort and PS subcohort comprised 75,081 (SKT 73,911, DKT 1,170) and 2,326 (SKT 1,163, DKT 1,163) patients, respectively. In the total study cohort, DKT recipients were older, more likely to be diabetic, and had longer cold ischemia times. DKT donors were also older, had lower pre-terminal kidney function, and were more likely to be expanded criteria donors. DKT (vs. SKT) was associated with decreased adjusted relative odds of DGF in the total study cohort (OR = 0.69 [95% CI: 0.60, 0.80]). DKT showed a protective effect on graft failure endpoints but no significant effect on mortality endpoints (see Table). The protective effect of DKT was significantly diminished in patients with DGF. Similar findings were observed in the PS subcohort.

CONCLUSIONS: DKT is associated with reduced rates of DGF and graft failure vs. SKT of similar quality. The occurrence of DGF may modify the protective effect of DKT on graft outcomes. The potential impact on life expectancy from transplanting two SKTs vs. one DKT using kidneys with qualities similar to those in this cohort requires further study.

2 Toronto Lung Transplant Program, University Health Network

3 Department of Physical Therapy, University of Toronto and Toronto Lung Transplant Program, University Health Network

4 St John’s Rehab Hospital, Department of Clinical Research

5 Graduate Department of Rehabilitation Science and Department of Physical Therapy, University of Toronto

Introduction: Upper limb muscle size, strength and exercise capacity have not been objectively assessed in lung transplant (LTx) candidates. The objectives of this study were to (1) compare upper limb muscle size, arm exercise capacity and muscle strength in a cohort of LTx candidates to healthy control subjects; and (2) examine predictors of arm exercise capacity in LTx candidates.

Methods: Thirty-four LTx candidates enrolled in a pulmonary rehabilitation program (60 ± 8 years; 59% males) with the following diagnoses (IPF=26, COPD=6, Bronchiectasis=2, Bronchiolitis Obliterans=1, Bronchoalveolar Carcinoma=1) and 12 healthy control subjects (56 ± 9.5 years; 50% males) were included in the study. All subjects underwent measures of biceps muscle thickness and muscle strength of the elbow flexors using ultrasound and a hand-held dynamometer (HHD), respectively. Subjects also performed the 6-minute walk test (6MWT) and the unsupported upper limb exercise test (UULEX).

Results: Biceps muscle thickness was 10% smaller in LTx candidates than in healthy controls, but the difference did not reach statistical significance (2.54±0.39 vs. 2.80±0.33cm2; p=0.06). Elbow flexion strength of LTx candidates was lower than that of controls (177.4±74 vs. 260.7±79 Newtons, i.e., control strength was 47% higher; p=0.01). LTx candidates had a shorter time to fatigue in the UULEX compared to controls (553.6 vs. 702sec; p<0.01). A moderate correlation was found between biceps muscle thickness and strength (r=0.60, p<0.01). Muscle strength also correlated with UULEX (r=0.58, p<0.01) and percent predicted 6MWT (r=0.46; p<0.01) in LTx candidates. Muscle strength was the only significant predictor of the UULEX (b=0.59, p<0.01).

Conclusion: Although the LTx candidates were actively participating in rehabilitation, upper limb muscle strength and exercise capacity were impaired; thus, specific training strategies may be required pre- and post-transplant to target improvements in upper limb function.

2 Université Paris Descartes and Hôpital Necker, Paris, France

3 Guy’s Hospital, London, UK

4 Toulouse University Hospital, Université Paul Sabatier and Institut National de la Santé et de la Recherche Médicale, Toulouse, France

5 The Queen Elizabeth Hospital, Woodville, Australia

6 Leiden University Medical Centre, Leiden, The Netherlands

7 Puget Sound Blood Center, Seattle, WA, USA

8 Alexion Pharmaceuticals, Inc., Cheshire, CT, USA

Methods: In a multicenter, open-label, phase 2, single-arm study of adult (≥18 years) candidates for deceased donor kidney transplantation with donor sensitization (N=47), select enrollment criteria were: stage V chronic kidney disease; prior exposure to human leukocyte antigens; presence of anti-donor antibodies by Luminex® and/or B- or T-cell positive cytometric flow crossmatch with negative CDC at transplantation; no ABO incompatibility, previous eculizumab treatment, or history of severe cardiac disease, splenectomy, bleeding disorder, or active infection. Patients received eculizumab (1200 mg on day 0 before reperfusion; 900 mg on days 1, 7, 14, 21, 28; 1200 mg at weeks 5, 7, 9) and induction/maintenance immunosuppressants. The primary endpoint was treatment failure rate (biopsy-proven AMR, graft loss, patient death, and/or loss to follow-up) at week 9 post-transplantation. Secondary endpoints included mean serum creatinine levels and treatment-emergent adverse events (TEAEs). Preliminary results are reported.

Results: Patients (mean age, 49.8 years) had a mean (SD) of 2.3 (1.6) DSAs and a mean (SD) total DSA median fluorescence intensity of 5,873 (4,675). Five patients (10.6%) met criteria for week 9 post-transplantation treatment failure (biopsy-proven AMR, n=3; graft loss and/or death, n=2). Mean serum creatinine level at month 3 was 1.74 mg/dL.

Conclusion: Eculizumab appeared to be a safe and effective prophylaxis against early acute AMR in sensitized recipients of deceased donor kidney transplants.

2 Department of Laboratory Medicine and Pathology, University of Alberta, Edmonton, Alberta, Canada; Provincial Laboratory for Public Health (ProvLab), Edmonton, Alberta, Canada

Methods: We developed an assay combining DNase I digestion and quantitative real-time PCR (CMV-QPCR) to differentiate CMV free DNA from encapsidated virions in plasma samples. The Qiagen DNA mini kit was used for viral DNA extraction and CMV-QPCR assay for quantitation. The amount of CMV free DNA was determined by subtracting virion DNA (after digestion) from total DNA (without digestion); DNase I degrades all free viral DNA but not encapsidated CMV virions. 104 serial CMV DNA positive stored plasma samples collected from 20 SOT patients with different pre-transplant CMV risk serostatus (10 = donor (D) +/recipient (R) -, 7 = D+/R+, 2 = D-/R+, and 1 = D-/R-) were tested.
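The subtraction described above (free DNA = total DNA without digestion minus virion DNA after digestion) can be written as a small helper. This is a minimal sketch: the function name, the clamping of negative differences to zero, and the example copy numbers are hypothetical and are not taken from the study.

```python
def free_dna_fraction(total_copies, virion_copies):
    """Estimate free (non-encapsidated) CMV DNA for one plasma sample.

    total_copies:  CMV DNA measured without DNase I digestion.
    virion_copies: CMV DNA measured after DNase I digestion (encapsidated DNA).
    Returns (free_copies, percent_free).
    """
    if total_copies <= 0:
        raise ValueError("total_copies must be positive")
    if virion_copies < 0:
        raise ValueError("virion_copies cannot be negative")
    # Assay noise could make the digested reading exceed the undigested one;
    # clamping at zero is an assumption of this sketch, not part of the source.
    free_copies = max(0.0, total_copies - virion_copies)
    percent_free = 100.0 * free_copies / total_copies
    return free_copies, percent_free


# Hypothetical paired readings (copies/mL) for a single sample.
free, pct = free_dna_fraction(total_copies=20_000, virion_copies=100)
print(f"free DNA: {free:.0f} copies/mL ({pct:.1f}% of total)")
```

Both readings must come from the same sample and the same dilution for the subtraction to be meaningful.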

Results: The optimized assay achieved 99.99%-100% efficiency in spiked CMV DNA degradation in plasma samples diluted 1:4. Non-encapsidated free DNA was the primary biologic form of CMV in plasma, representing 98.82%-100% of all detectable CMV DNA in all samples. CMV virions were not detected in 88 samples (84.6%) but found at extremely low levels (≤0.5% virions) in 16 samples (15.4%).